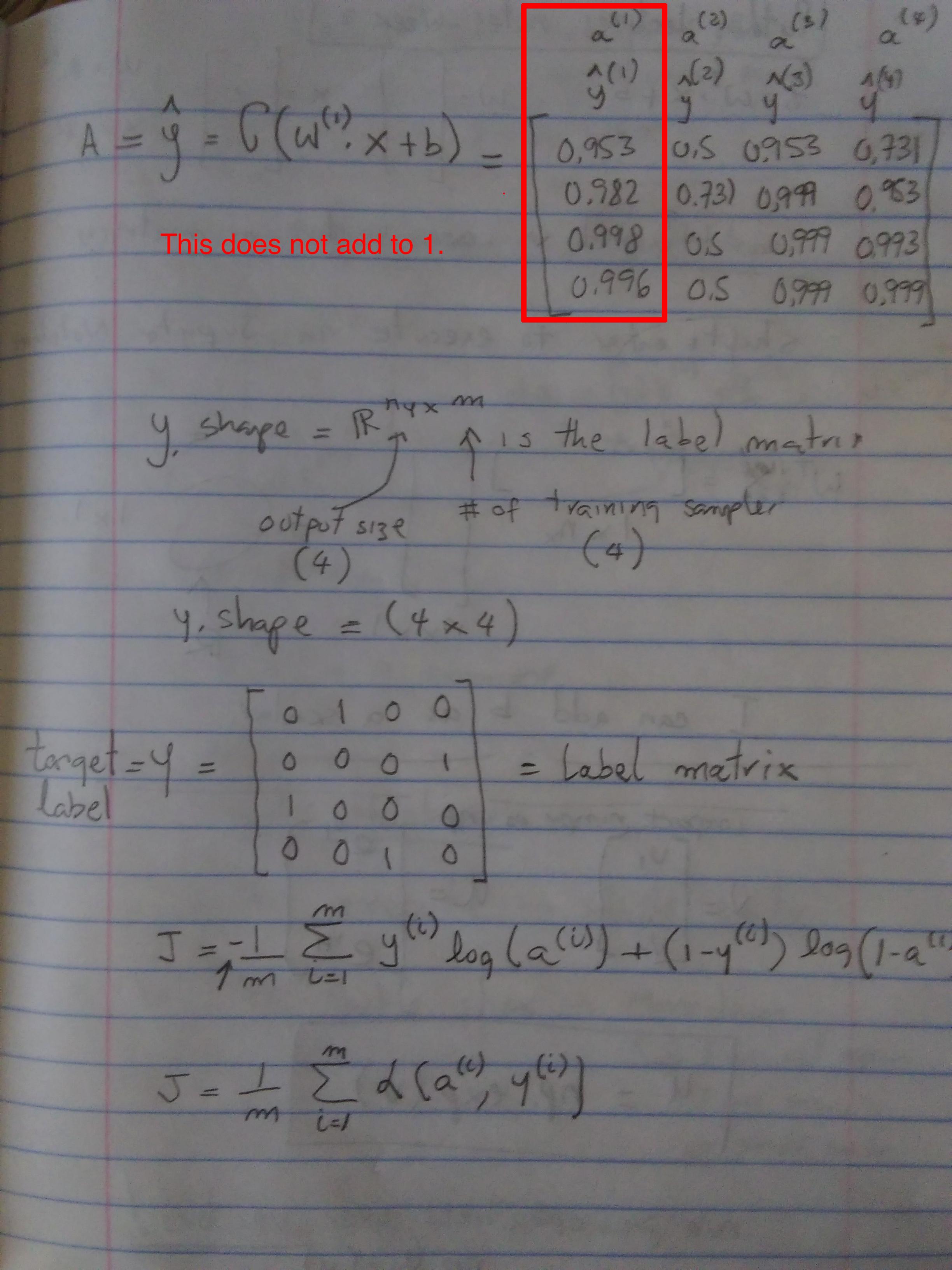

Softmax does not add 1

Posted on December 29, 2018

Response:

Paul T Mielke - Mentor

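You are not using softmax. You are just using sigmoid, and that's not the same thing. Sigmoid is the degenerate case of softmax when there is only one output unit (binary classification, which is equivalent to a two-class softmax with one of the logits fixed at 0), but for n > 1 output classes the formula is different: softmax divides each exponential by the sum of the exponentials over all classes, so the outputs add up to 1. Applying sigmoid to each output separately does not do that.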
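To make the difference concrete, here is a minimal NumPy sketch. The function names and the logit values are made up for illustration and are not taken from the course notebook; subtracting the max inside `softmax` is a common numerical-stability trick, not something the formula itself requires.

```python
import numpy as np

def sigmoid(z):
    # Elementwise logistic function: each output is computed independently.
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    # Subtract the max for numerical stability (does not change the result),
    # then normalize the exponentials so they sum to 1.
    exps = np.exp(z - np.max(z))
    return exps / np.sum(exps)

# Hypothetical logits for a 3-class problem.
z = np.array([1.0, 2.0, 3.0])

print("sigmoid outputs sum to:", sigmoid(z).sum())  # not 1 in general
print("softmax outputs sum to:", softmax(z).sum())  # 1 up to rounding

# Two-class softmax with the second logit fixed at 0 matches sigmoid.
x = 0.7
two_class = softmax(np.array([x, 0.0]))
print("two-class softmax matches sigmoid:", np.isclose(two_class[0], sigmoid(x)))
```

If your outputs do not add up to 1, checking the sum like this is a quick way to tell whether you are normalizing across classes or just applying sigmoid to each value.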

This was covered in the first numpy practice exercise in Week 2. Prof Ng will also go into more detail and actually use softmax in the second course, so stay tuned for that.

Softmax function in Wikipedia